Machine Learning & Analytics Projects

We deliver end-to-end technology solutions that drive innovation and create lasting business value.

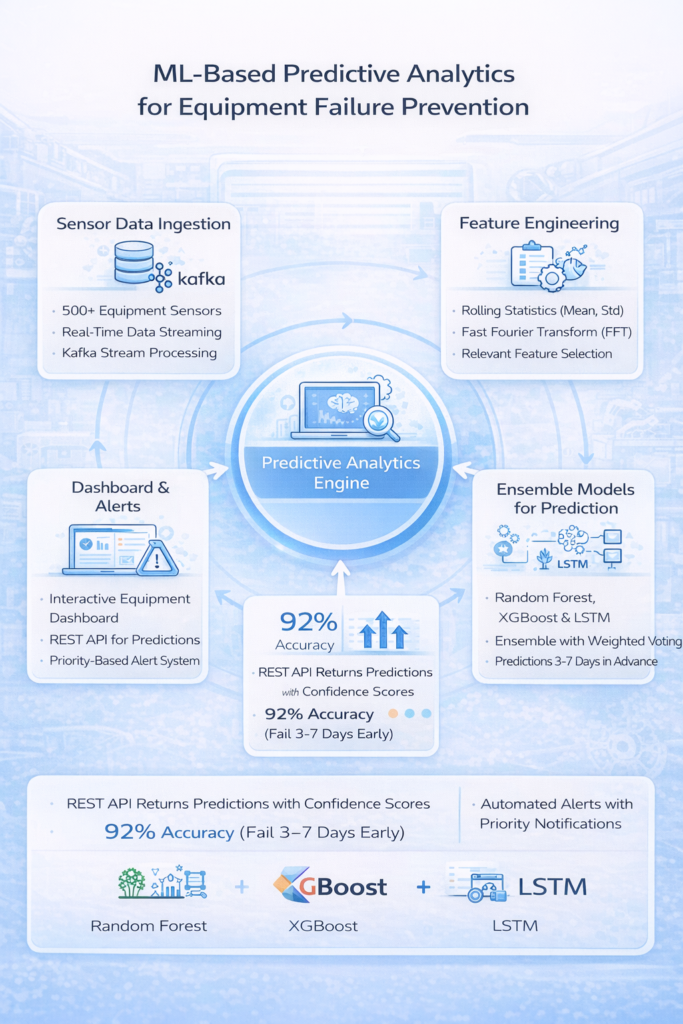

Predictive Maintenance System for Manufacturing

Technology Stack: Python, Scikit-learn, TensorFlow, FastAPI, React, PostgreSQL, Apache Kafka, Docker

Description: Built an ML-based predictive analytics engine that detects equipment-failure risk from real-time sensor data. The system processes time-series data from 500+ sensors, engineers features with rolling statistics and FFT (frequency-domain) analysis, and uses an ensemble of models (Random Forest, XGBoost, LSTM) to predict failures 3 to 7 days in advance. Sensor data is ingested in real time through Kafka with stream processing, and the models' outputs are combined by weighted voting for more robust predictions. The system exposes a REST API that returns predictions with confidence scores, an interactive dashboard for monitoring equipment health, and an automated alerting system with priority-based notifications. It achieved 92% accuracy in failure prediction and reduced unplanned downtime by 40%, saving $2.1M annually in avoided downtime and maintenance costs.
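The feature-engineering and ensemble steps described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the production code: the window length (8 samples), step (4), the particular features, the model names, and the model weights are all hypothetical, and the ensemble is assumed to use soft weighted voting (a weighted average of each model's failure probability). A small recursive radix-2 FFT keeps the example dependency-free; a real pipeline would more likely use NumPy's `np.fft.rfft`.

```python
import cmath
import math
import statistics


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("window length must be a power of two")
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def window_features(window):
    """Rolling statistics plus spectral features for one window of one sensor (hypothetical feature set)."""
    mean = statistics.fmean(window)
    # Remove the mean so the DC component does not dominate the spectrum.
    spectrum = fft([v - mean for v in window])
    # Keep positive-frequency bins 1..n/2 (bin k corresponds to k * sample_rate / n Hz).
    mags = [abs(c) for c in spectrum[1 : len(window) // 2 + 1]]
    return {
        "mean": mean,
        "std": statistics.pstdev(window),
        "min": min(window),
        "max": max(window),
        "dominant_bin": mags.index(max(mags)) + 1,
        "spectral_energy": sum(m * m for m in mags),
    }


def rolling_features(series, window=8, step=4):
    """Slide a fixed-size window over the series and featurize each position."""
    return [
        window_features(series[i : i + window])
        for i in range(0, len(series) - window + 1, step)
    ]


def weighted_vote(probabilities, weights, threshold=0.5):
    """Soft weighted voting: weighted mean of per-model failure probabilities."""
    total = sum(weights[name] for name in probabilities)
    score = sum(weights[name] * p for name, p in probabilities.items()) / total
    return score >= threshold, score  # (predicted failure?, ensemble failure probability)


# Usage: a synthetic vibration signal with 2 cycles per 8-sample window.
signal = [math.sin(2 * math.pi * 2 * i / 8) for i in range(16)]
features = rolling_features(signal)
failing, confidence = weighted_vote(
    {"random_forest": 0.8, "xgboost": 0.6, "lstm": 0.9},
    {"random_forest": 1.0, "xgboost": 1.0, "lstm": 2.0},
)
```

With soft voting, a model given a larger weight (the LSTM in this made-up example) pulls the ensemble score toward its own estimate, and the resulting probability is one plausible source for the confidence score an API could return.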

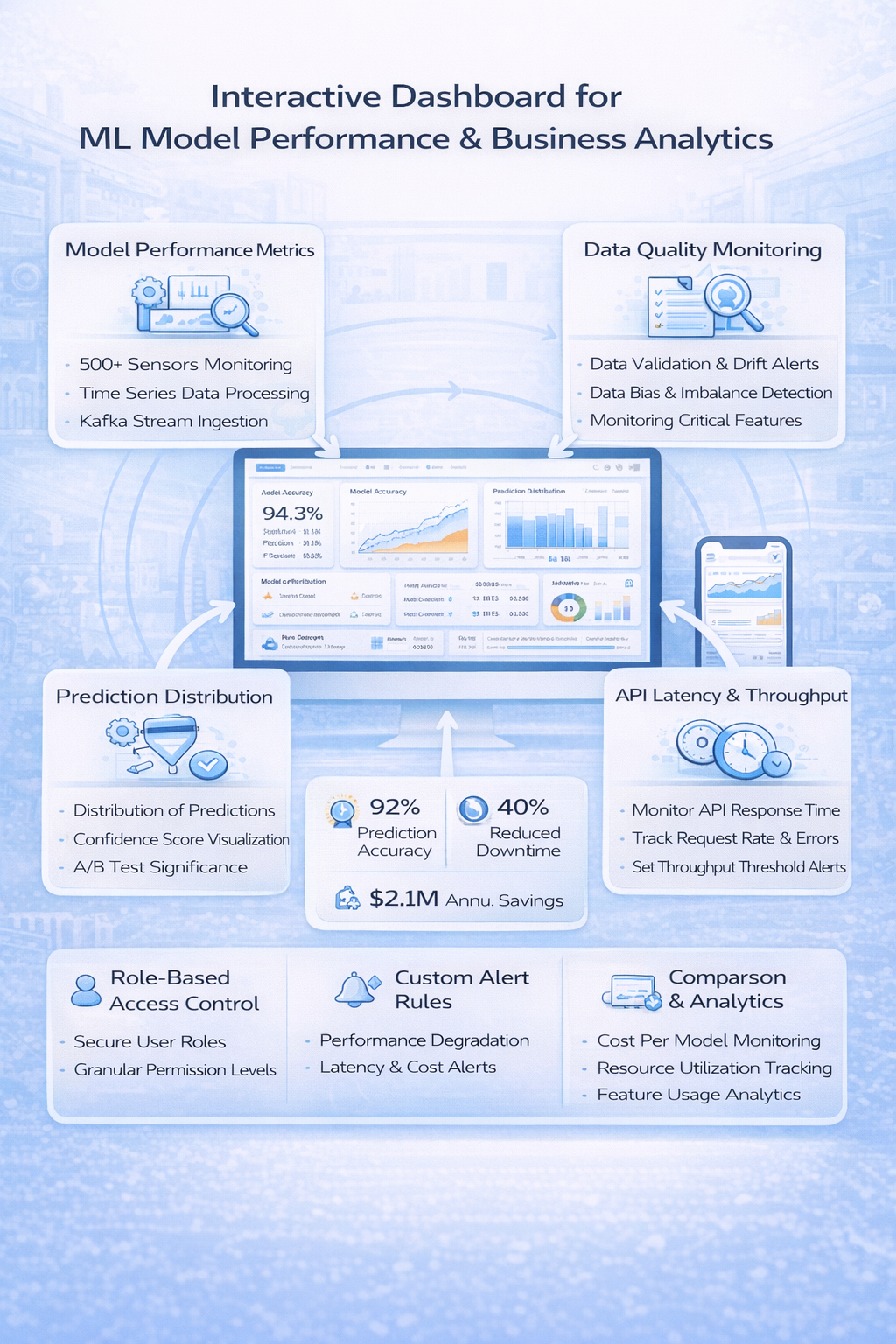

Enterprise AI Dashboard & Model Performance Monitoring

Technology Stack: React, Node.js, Flask, Python, MongoDB, Grafana, Prometheus, Docker

Description: Created an interactive dashboard for visualizing ML model performance, data-quality metrics, and business KPIs in real time, supporting 10,000+ daily users with role-based access. The platform provides model insights including performance metrics (accuracy, precision, recall, F1-score) with trend analysis, visualizations of prediction distributions and confidence scores, and A/B test results with statistical-significance calculations. Implemented automated model-drift detection using statistical tests, plus data-quality monitoring with alerting. Additional features include custom alert rules for performance degradation, API latency and throughput monitoring, per-model cost tracking, and feature-usage analytics. Teams can track model performance over time, compare model versions, and make data-driven decisions about model improvements and deployments.
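The two statistical checks mentioned above can be illustrated with small, dependency-free functions. The description does not name the tests used, so this sketch assumes a two-sample Kolmogorov-Smirnov (KS) test for drift (comparing a feature's reference distribution with recent production data) and a two-sided two-proportion z-test for A/B significance. Function names and sample data are hypothetical; in practice `scipy.stats.ks_2samp` returns an exact KS p-value instead of the large-sample threshold used here.

```python
import math


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(reference), sorted(current)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Step both empirical CDFs past every sample equal to x (handles ties).
        while i < n and a[i] == x:
            i += 1
        while j < m and b[j] == x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d


def drift_detected(reference, current, alpha=0.05):
    """Flag drift when D exceeds the large-sample KS critical value at level alpha."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.358 for alpha = 0.05
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, current) > critical


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference in success rates; assumes 0 < pooled rate < 1."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Usage: a shifted feature distribution, and an A/B comparison of two model versions.
baseline = list(range(100))
shifted = list(range(50, 150))
z, p = two_proportion_z_test(100, 1000, 150, 1000)
```

Here half of the shifted sample lies beyond the baseline's range, giving D = 0.5, well above the critical value of roughly 0.19 for two samples of 100, so drift is flagged; the A/B example yields z of about 3.4 and a p-value below 0.001.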