AI Platform

We deliver end-to-end technology solutions that drive innovation and create lasting business value.

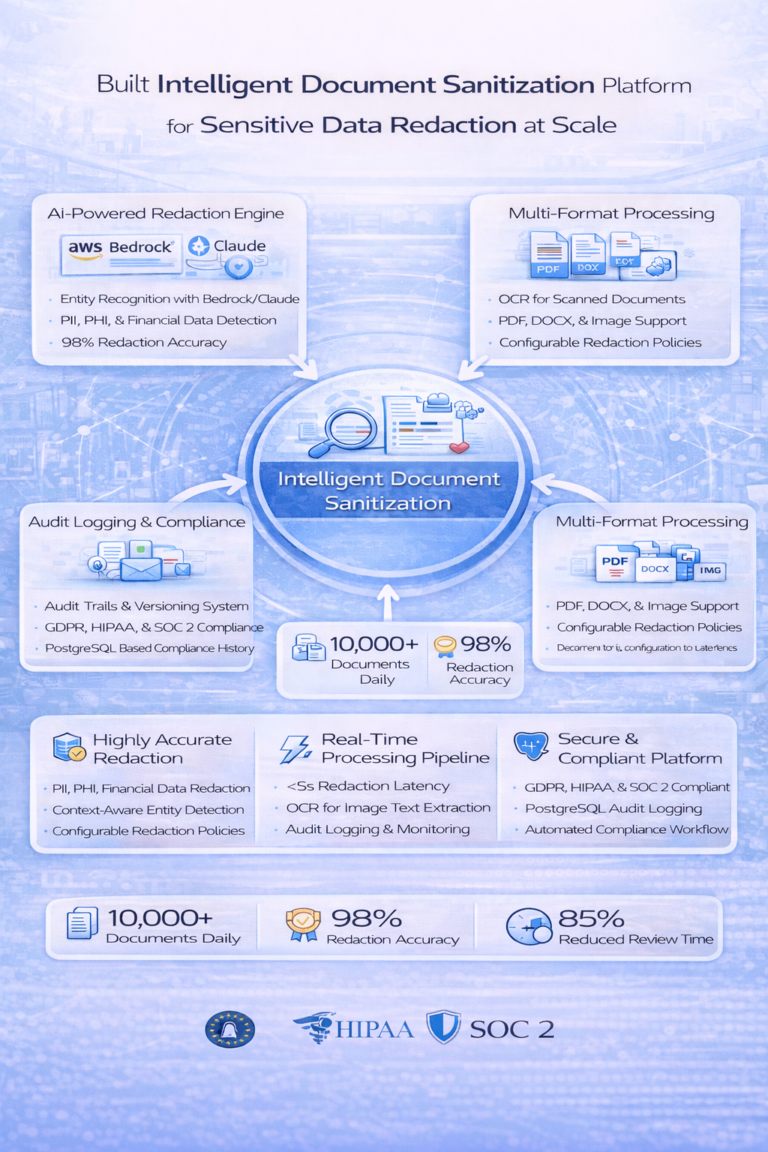

Enterprise Document Sanitization & PII Redaction System

Technology Stack: Python, AWS Bedrock (Claude), PostgreSQL, Docker, Kubernetes, Redis, AWS S3

Developed an intelligent document sanitization platform that automatically detects and redacts sensitive information (PII, PHI, financial data) from enterprise documents at scale. The system leverages AWS Bedrock’s Claude models for context-aware entity recognition and redaction, processing 10,000+ documents daily with 98% accuracy. It supports multi-format processing, including PDF, DOCX and images via OCR, with configurable redaction policies per document type. A PostgreSQL-backed versioning system maintains audit trails and compliance history for document tracking, in support of GDPR, HIPAA and SOC 2 compliance. Features include a real-time processing pipeline with under 5-second latency, comprehensive audit logging and a RESTful API for integration. Reduced manual review time by 85% and enabled automated compliance workflows across the organization.
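As an illustration of how per-document-type redaction policies can work, here is a minimal Python sketch. It assumes entity spans have already been detected upstream (for example by the model); the `Entity` class, the `POLICIES` table and the label names are hypothetical and not taken from the production system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str  # hypothetical label such as "NAME" or "EMAIL"


# Hypothetical policy table: which entity labels to redact per document type.
POLICIES = {
    "medical_record": {"NAME", "SSN", "EMAIL"},
    "invoice": {"SSN", "EMAIL"},
}


def redact(text: str, entities: list[Entity], doc_type: str) -> str:
    """Replace every entity covered by the document type's policy with [LABEL]."""
    labels = POLICIES.get(doc_type, set())
    # Work from the end of the text so earlier offsets remain valid.
    for ent in sorted(entities, key=lambda e: e.start, reverse=True):
        if ent.label in labels:
            text = text[:ent.start] + f"[{ent.label}]" + text[ent.end:]
    return text
```

With this layout, adding a new document type is a change to the policy table rather than to the redaction logic, and an unknown document type leaves the text untouched.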

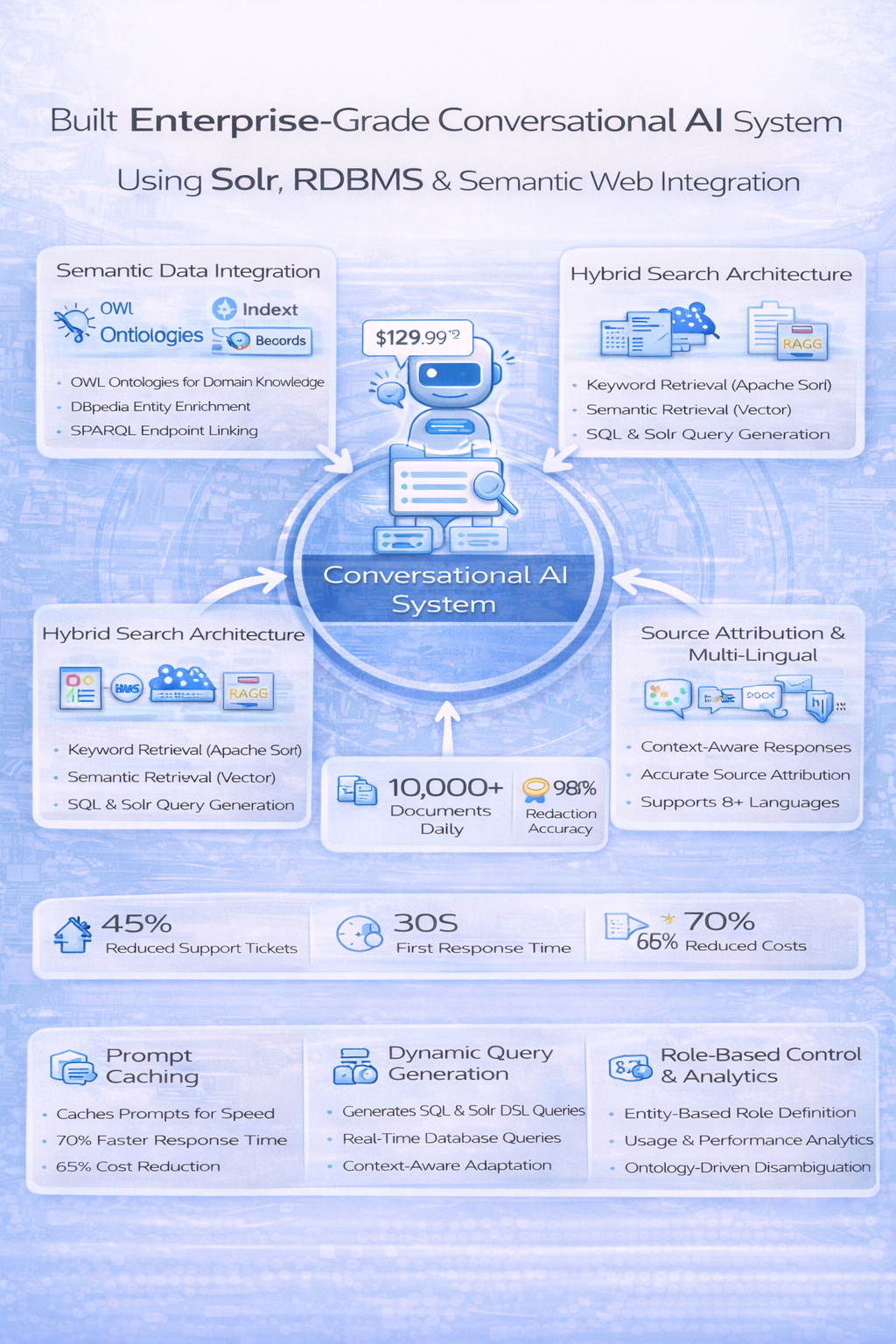

Intelligent Knowledge Base Chatbot with AWS Bedrock

Technology Stack: AWS Bedrock (Claude Sonnet 4), LangChain, Apache Solr, PostgreSQL, Vector DB (Pinecone), FastAPI, React, OWL/RDF, DBpedia SPARQL

Built an enterprise-grade conversational AI system that answers questions by querying structured data from Apache Solr indexes and relational databases. Implemented semantic web integration using OWL ontologies for domain knowledge representation and the DBpedia SPARQL endpoint for entity enrichment and linking. The chatbot uses AWS Bedrock’s Claude models with a RAG (Retrieval-Augmented Generation) architecture, combining semantic search across 50M+ indexed records with real-time database queries and ontology-based reasoning. Features include hybrid search combining keyword (Solr) and semantic (vector) retrieval, dynamic query generation for SQL and Solr DSL, context-aware responses with source attribution, and multilingual support (8+ languages). Implemented prompt caching to reduce latency by 70% and costs by 65%. Also includes role-based access control, an analytics dashboard for usage patterns and ontology-driven entity disambiguation. Reduced customer support tickets by 45% and improved first-response time from 4 hours to under 30 seconds.
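One common way to combine a keyword ranking with a vector ranking is reciprocal rank fusion (RRF). The sketch below illustrates the idea only; the project description does not state which fusion method was used, and the function name, the document IDs and the constant `k=60` are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked highly by both retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical result lists from the keyword and vector retrievers.
keyword_hits = ["a", "b", "c"]
vector_hits = ["b", "d", "a"]
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

RRF only needs rank positions, which sidesteps the fact that keyword relevance scores and vector similarity scores are on different scales.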

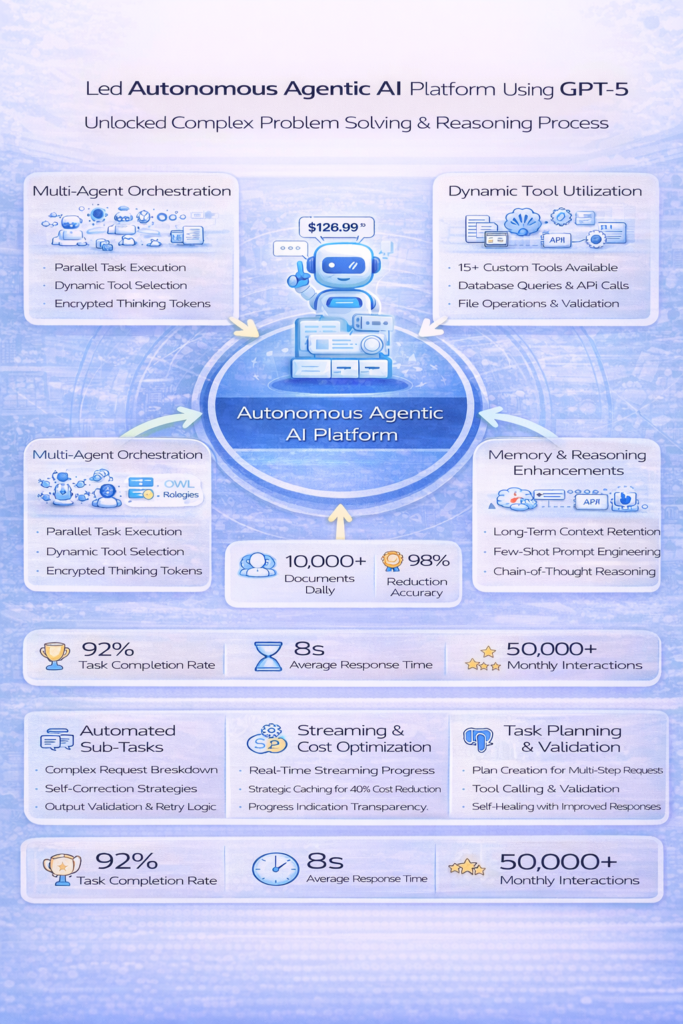

Agentic AI Migration: GPT-5 Powered Autonomous Assistant

Technology Stack: Python, Azure OpenAI GPT-5, LangChain, LangGraph, Azure Functions, Kubernetes, Redis, PostgreSQL

Led the architectural transformation of a traditional conversational AI system into an autonomous agentic AI platform using the GPT-5 Responses API with encrypted thinking tokens. The system features multi-step reasoning with self-correction and dynamic tool selection from 15+ custom tools, including database queries, API integrations and file operations. Implemented multi-agent orchestration for parallel task execution, tool calling with automatic parameter validation and retry logic, and a memory management system for long-term context retention. Used advanced prompt engineering with few-shot examples and chain-of-thought reasoning for complex problem solving. Features include real-time streaming responses with progress indicators and a 40% cost reduction through strategic caching. The agent autonomously breaks down complex user requests into executable sub-tasks, validates outputs and provides comprehensive responses with full transparency into its reasoning process. Achieved a 92% task completion rate for complex multi-step queries, with an average response time of 8 seconds for 5-step reasoning chains, handling 50,000+ monthly interactions with a 4.7/5 user satisfaction rating.
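Tool calling with parameter validation and retry logic can be sketched in a few lines of plain Python. This is an illustrative outline, not the platform's actual code: the registry layout, `ToolError`, `call_tool` and the backoff settings are all hypothetical.

```python
import time


class ToolError(Exception):
    """Raised by a tool to signal a transient, retryable failure."""


def call_tool(tools, name, params, max_attempts=3, base_delay=0.0):
    """Validate params against the tool's spec, then call it with retries."""
    spec = tools[name]
    missing = [p for p in spec["required"] if p not in params]
    if missing:
        # Reject the call before execution so the agent can repair its arguments.
        raise ValueError(f"missing parameters for {name}: {missing}")
    for attempt in range(1, max_attempts + 1):
        try:
            return spec["func"](**params)
        except ToolError:
            if attempt == max_attempts:
                raise
            # Exponential backoff between attempts (no wait when base_delay is 0).
            time.sleep(base_delay * 2 ** (attempt - 1))


# Hypothetical tool that fails twice before succeeding.
calls = {"count": 0}


def flaky_lookup(customer_id):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ToolError("temporary outage")
    return {"customer_id": customer_id, "status": "active"}


TOOLS = {"lookup": {"func": flaky_lookup, "required": ["customer_id"]}}
result = call_tool(TOOLS, "lookup", {"customer_id": 42})
```

Validating before the first attempt keeps malformed calls from consuming retries, and only `ToolError` is retried so that programming errors surface immediately.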

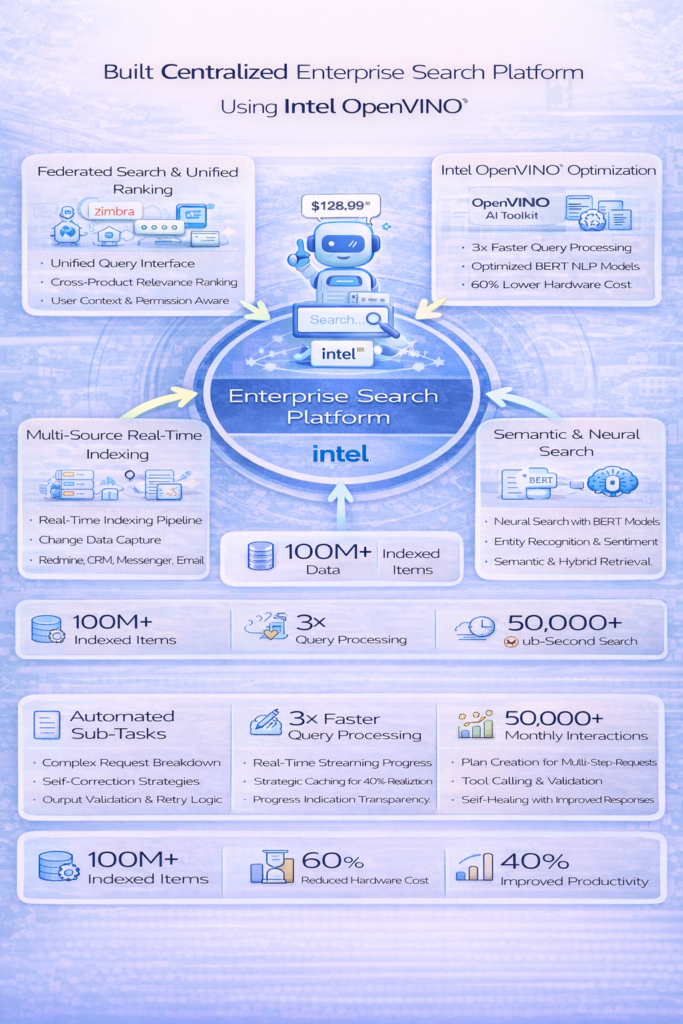

Unified Enterprise Search Platform with AI Acceleration (Intel-Sponsored)

Technology Stack: Python, Apache Solr, Elasticsearch, OpenVINO, React, Redis, PostgreSQL, Zimbra API, Prosody XMPP, Redmine API, Docker

Developed a centralized search platform providing a unified search experience across multiple enterprise products, including Zimbra email, a Prosody-based messenger, a CRM system and Redmine project management. Integrated the Intel OpenVINO toolkit for AI model optimization and inference acceleration, achieving 3x faster query processing and semantic search. The system indexes data from heterogeneous sources in real time and provides a single search interface with cross-product relevance ranking. Implemented neural search capabilities using OpenVINO-optimized BERT models for query understanding and document embedding. Features include federated search with a unified ranking algorithm, real-time indexing with change data capture, personalized search results based on user context and permissions, and advanced filters for each product type. Used OpenVINO to accelerate NLP models (entity recognition, sentiment analysis, text classification) on CPU infrastructure, reducing hardware costs by 60%. Supports full-text search, semantic search and hybrid retrieval modes. Achieved sub-200ms search latency for queries across 100M+ indexed items and improved user productivity by 40% through faster information discovery. The project was sponsored and technically validated by Intel.
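Federated search with a unified ranking has to make scores from different backends comparable. The sketch below shows one simple approach, per-source min-max normalization followed by a merge that skips sources the user may not access. The actual ranking algorithm is not described in the text, so the function names, source names and scores here are assumptions.

```python
def normalize(hits):
    """Min-max normalize (doc_id, score) pairs from one source to [0, 1]."""
    if not hits:
        return []
    scores = [score for _, score in hits]
    lo, hi = min(scores), max(scores)
    span = hi - lo
    # A source whose hits all share one score maps every hit to 1.0.
    return [(doc_id, 1.0 if span == 0 else (score - lo) / span)
            for doc_id, score in hits]


def federated_rank(results_by_source, allowed_sources):
    """Merge per-source hits into one list, skipping sources the user cannot access."""
    merged = []
    for source, hits in results_by_source.items():
        if source not in allowed_sources:
            continue
        merged.extend((score, source, doc_id) for doc_id, score in normalize(hits))
    # Best score first; ties broken by source name, then document ID.
    merged.sort(key=lambda t: (-t[0], t[1], t[2]))
    return [(source, doc_id, score) for score, source, doc_id in merged]


# Hypothetical raw scores on different scales from three backends.
results = {
    "zimbra": [("m1", 12.0), ("m2", 4.0)],
    "redmine": [("t1", 9.0), ("t2", 6.0), ("t3", 3.0)],
    "crm": [("c1", 5.0)],
}
ranked = federated_rank(results, allowed_sources={"zimbra", "redmine"})
```

Min-max normalization is easy to reason about but sensitive to outliers; a production ranker would likely also weight sources and fold in user context.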